Recommended Parameters for NiFi and Elasticsearch

You can run your Elasticsearch and NiFi environments with the default settings, which provide a minimal resource set. For best performance, tune your configuration or use the recommended parameters for CPU, memory, and system resources.

System Resources, Memory Footprint, and CPU Allocation for Elasticsearch and NiFi

Both the recommended and the minimal environments consist of multiple nodes that host the Elasticsearch and NiFi pods. After the initial installation, the system has three Elasticsearch Kubernetes pods and one NiFi pod by default. Each pod is a group of Docker containers with shared namespaces and shared filesystem volumes.

In the minimal setup, each pod has 6 vCPUs and 10 GB of RAM. In the recommended configuration, each pod has access to 16 vCPUs and at least 16 GB of heap space.

You might need to adjust or overcommit certain resources. If you do, test the resulting configuration further to ensure stability and operability.

| Configuration | Pods | vCPUs per Elasticsearch pod | vCPUs per NiFi pod | Elasticsearch heap (GB) | NiFi heap (GB) |
|---|---|---|---|---|---|
| Minimal configuration (default) | 3 Elasticsearch, 1 NiFi | 6 | 6 | 12 | 9 |
| Recommended configuration | 3 Elasticsearch, 1 NiFi | 16 | 16 | 16 | 12 |
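To see what each configuration asks of the cluster as a whole, multiply the per-pod values by the pod counts. The following Python sketch does that arithmetic for the two rows of the table above. It assumes the heap columns are in GB and counts only JVM heap, not the additional memory each container needs beyond the heap.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PodProfile:
    """Per-pod resources for one configuration, copied from the table above."""
    es_pods: int
    nifi_pods: int
    es_vcpus: int
    nifi_vcpus: int
    es_heap_gb: int
    nifi_heap_gb: int

    def total_vcpus(self) -> int:
        # Every Elasticsearch pod gets es_vcpus; every NiFi pod gets nifi_vcpus.
        return self.es_pods * self.es_vcpus + self.nifi_pods * self.nifi_vcpus

    def total_heap_gb(self) -> int:
        # JVM heap only; each pod also needs memory outside the heap.
        return self.es_pods * self.es_heap_gb + self.nifi_pods * self.nifi_heap_gb


MINIMAL = PodProfile(es_pods=3, nifi_pods=1, es_vcpus=6, nifi_vcpus=6,
                     es_heap_gb=12, nifi_heap_gb=9)
RECOMMENDED = PodProfile(es_pods=3, nifi_pods=1, es_vcpus=16, nifi_vcpus=16,
                         es_heap_gb=16, nifi_heap_gb=12)

for name, profile in (("minimal", MINIMAL), ("recommended", RECOMMENDED)):
    print(f"{name}: {profile.total_vcpus()} vCPUs, {profile.total_heap_gb()} GB heap")
```

For these rows the totals come to 24 vCPUs and 45 GB of heap (minimal) and 64 vCPUs and 60 GB of heap (recommended).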

NiFi

The throughput achieved in the NiFi/Elasticsearch cluster determines how fast data is processed and indexes are created. Two factors that can increase and optimise performance for a given hardware footprint are the number of NiFi processor threads and the bucket size.

Threads

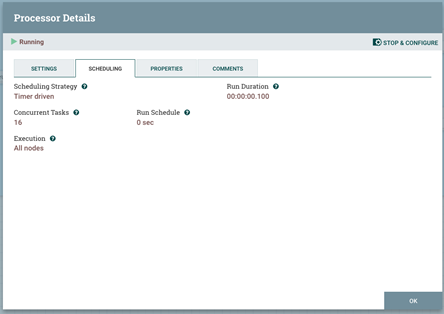

In NiFi, each processor can process flowfiles in parallel. To use this, increase the processor's concurrent tasks setting above 1 so that more threads are allocated to the processor. The NLP processor in the snapshot is configured with 16 concurrent tasks, which corresponds to the number of vCPUs available on the node.

Increasing the number of threads improves processor throughput. If the processor is doing computational (CPU-bound) work, throughput grows by roughly a factor equal to the number of threads, that is, scaling is linear, as long as free vCPUs are available.

For I/O operations, the processor sees some improvement, but the gains diminish beyond a certain number of threads: scaling is nonlinear and eventually saturates.
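The difference between the two cases can be illustrated with a toy model. The sketch below is not a measurement of NiFi: it assumes ideal linear scaling capped at the vCPU count for CPU-bound work and, for I/O-bound work, a hypothetical saturating curve whose ceiling (`saturation`) stands in for whichever I/O resource is the bottleneck.

```python
def cpu_bound_speedup(threads: int, vcpus: int) -> float:
    """Idealized CPU-bound case: speedup grows linearly until every vCPU is busy."""
    if threads < 1 or vcpus < 1:
        raise ValueError("threads and vcpus must be at least 1")
    return float(min(threads, vcpus))


def io_bound_speedup(threads: int, saturation: float) -> float:
    """Idealized I/O-bound case: each extra thread helps less than the previous one.

    `saturation` is a hypothetical ceiling imposed by the I/O resource
    (disk, network, or the Elasticsearch cluster), not by the vCPU count.
    The curve equals 1.0 for one thread and approaches `saturation` as threads grow.
    """
    if threads < 1 or saturation < 1:
        raise ValueError("threads and saturation must be at least 1")
    return saturation * threads / (saturation + threads - 1)


# Example: 16 vCPUs (as in the recommended configuration), hypothetical I/O ceiling of 4x.
for n in (1, 2, 4, 8, 16, 32):
    print(f"{n:>2} threads: CPU-bound x{cpu_bound_speedup(n, 16):.1f}, "
          f"I/O-bound x{io_bound_speedup(n, 4.0):.2f}")
```

In this model, going from 8 to 16 threads doubles CPU-bound throughput but adds only a fraction to I/O-bound throughput, which is why measuring your own flow is the only reliable way to pick a thread count.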

Bucket Size

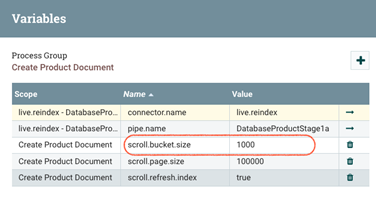

Another setting you can change to increase processor bandwidth is the bucket size (scroll.bucket.size). Raising the bucket size increases the size of the chunk of data that a single processor handles as one group.
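As a rough illustration of why bucket size matters, the sketch below groups a stream of documents into buckets of a given size. It is a generic chunking function, not the NiFi or HCL Commerce implementation, and the document count is hypothetical. If each bucket carries a fixed per-request overhead, fewer, larger buckets spend less time on that overhead, at the cost of holding more data in memory per bucket.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def buckets(items: Iterable[T], bucket_size: int) -> Iterator[List[T]]:
    """Yield successive groups of at most `bucket_size` items."""
    if bucket_size < 1:
        raise ValueError("bucket_size must be at least 1")
    it = iter(items)
    while True:
        chunk = list(islice(it, bucket_size))  # take up to bucket_size items
        if not chunk:
            return
        yield chunk


# Hypothetical workload: 10,000 documents to process.
documents = range(10_000)
for size in (100, 500, 1_000):
    count = sum(1 for _ in buckets(documents, size))
    print(f"bucket size {size}: {count} buckets")
```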

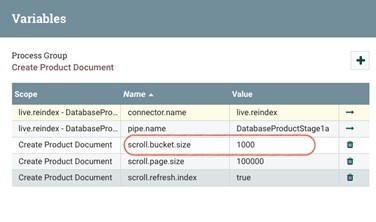

The location of the bucket size variable is not immediately obvious. In HCL Commerce Version 9.1.6 and later, you can find it at the second level of the process group.

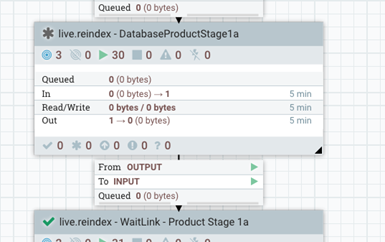

- The top level looks like this:

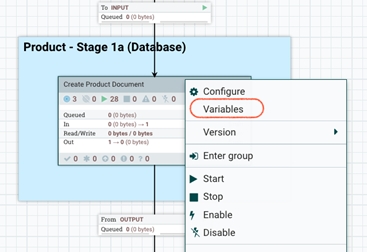

- Drill down one level, right-click, and choose "Variables":

- Change the bucket size value:

Elasticsearch

Indices Refresh Rate

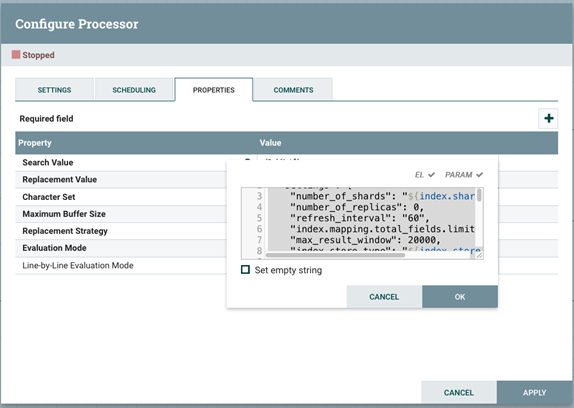

By default, Elasticsearch refreshes indices every second, but only indices that have received one or more search requests in the previous 30 seconds. The HCL Commerce index schema sets the refresh interval to 10 seconds by default. If it is viable, disable refreshing by setting the interval to -1, or set a much longer interval (for example, 60 seconds).
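At the Elasticsearch level, the refresh interval is an index setting that can be changed through the update index settings API (`PUT /<index>/_settings`). The sketch below only builds that request with the Python standard library. The host and index name are placeholders, and in an HCL Commerce deployment the index schema or the indexing flow may apply its own value, so treat this as an illustration of the setting rather than the product workflow.

```python
import json
import urllib.request


def refresh_interval_request(base_url: str, index: str, interval: str) -> urllib.request.Request:
    """Build (but do not send) an Elasticsearch update-index-settings request.

    interval: "-1" disables periodic refresh; a time value such as "60s" lengthens it.
    """
    body = json.dumps({"index": {"refresh_interval": interval}}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/{index}/_settings",
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


# Placeholder endpoint and index name; substitute the ones from your deployment.
req = refresh_interval_request("http://localhost:9200", "my-product-index", "-1")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
# To actually apply the setting: urllib.request.urlopen(req)
```

If refresh is disabled for a bulk load, restore a finite interval afterwards (or trigger an explicit refresh); otherwise newly indexed documents do not become visible to search.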

To set up a custom value, follow the workflow

Indexing Buffer Sizes

A larger indexing buffer speeds up indexing operations, so increasing its size improves overall index build speed. The size is set by the indices.memory.index_buffer_size setting, which defaults to 10% of the heap size out of the box.

It is advisable to set it higher, to 20% of the heap size.
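`indices.memory.index_buffer_size` is a static, node-level setting (written in `elasticsearch.yml`, for example as `indices.memory.index_buffer_size: 20%`), and the buffer is shared by all shards on the node. The sketch below shows what the 10% default and the suggested 20% work out to for the Elasticsearch heap values in the table above, reading those values as GB.

```python
def index_buffer_mb(heap_gb: float, fraction: float) -> float:
    """Indexing buffer shared by all shards on one node, as a fraction of JVM heap."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    return heap_gb * 1024 * fraction  # GB -> MB, then apply the percentage


for heap_gb in (12, 16):  # minimal and recommended Elasticsearch heap from the table
    default = index_buffer_mb(heap_gb, 0.10)
    tuned = index_buffer_mb(heap_gb, 0.20)
    print(f"{heap_gb} GB heap: {default:.0f} MB at 10%, {tuned:.0f} MB at 20%")
```

For a 16 GB heap, raising the setting from 10% to 20% grows the buffer from about 1.6 GB to about 3.2 GB, memory that is then unavailable to other heap consumers on that node.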

For more information about Elasticsearch indexing refresh intervals, see the Elasticsearch Guide.