Component Pack overview

Review the underlying architecture and technologies used by the Component Pack for HCL Connections™.

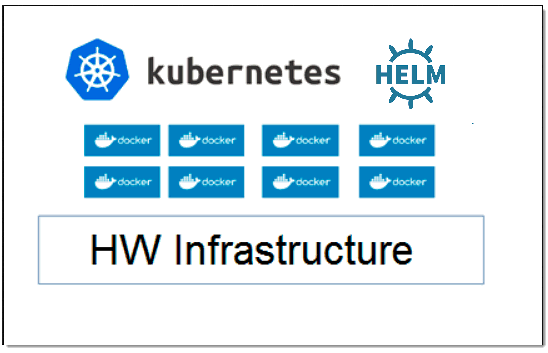

Component Pack for HCL Connections™ is designed for, and deployed on, a different software stack from HCL Connections™ 6.0, so it requires separate hardware infrastructure. Its architecture consists of three main components:

- Container manager (Docker)

  With containers, everything required to run a piece of software is packaged into an isolated container. Unlike VMs, containers do not bundle a full operating system; they include only the libraries and settings that the software needs to work. This makes containers efficient, lightweight, and self-contained, and ensures that software runs the same way regardless of where it is deployed.

- Container orchestrator (Kubernetes)

  Kubernetes is an open-source platform for automating deployment, scaling, and operations of application containers across clusters of hosts, providing container-centric infrastructure.

- Package manager for Kubernetes (Helm)

  Helm helps you manage Kubernetes applications. Helm charts help you define, install, and upgrade even the most complex Kubernetes application.

This diagram illustrates the architecture of the Component Pack.

The architecture components can be deployed on anything from a single VM (for evaluation purposes only) to multiple VMs, depending on the scale required. During installation, you need to provide an IPv4 address for each node. Table 1 describes the artifacts that might be required in your deployment.
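Because an IPv4 address is needed for every node, it can help to check your node inventory before starting the installation. The following Python sketch is only an illustration, not part of the Component Pack tooling: the node names and addresses in `NODES` are hypothetical, and the check relies solely on the standard `ipaddress` module.

```python
import ipaddress

# Hypothetical node inventory: names and addresses are placeholders.
NODES = {
    "master-1": "10.0.0.11",
    "worker-generic-1": "10.0.0.21",
    "worker-infra-1": "10.0.0.31",
    "storage-1": "fe80::1",  # an IPv6 address, so it fails the IPv4 check
}


def invalid_ipv4_nodes(nodes: dict[str, str]) -> list[str]:
    """Return the names of nodes whose address is not a valid IPv4 address."""
    bad = []
    for name, address in nodes.items():
        try:
            ipaddress.IPv4Address(address)
        except ipaddress.AddressValueError:
            bad.append(name)
    return bad


if __name__ == "__main__":
    problems = invalid_ipv4_nodes(NODES)
    if problems:
        print("Nodes without a valid IPv4 address:", ", ".join(problems))
    else:
        print("All nodes have valid IPv4 addresses.")
```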

| Artifact | Description |
|---|---|
| Master node | A master node provides management services and controls the worker nodes in a cluster. Master nodes host processes that are responsible for resource allocation, state maintenance, scheduling, and monitoring. Note: In a high availability (HA) environment, multiple master nodes are required to allow for failover if the leading master host fails. Deploy a load balancer that routes requests for the API server to the master nodes in round-robin fashion. If the leading master fails, the load balancer continues to route requests to an available master. |
| Worker node | A worker node provides a containerized environment for running tasks. As demand increases, you can add worker nodes to your cluster to improve performance and efficiency. A cluster can contain any number of worker nodes, but at least one worker node is required. Component Pack uses two types of worker node: an infrastructure worker, which hosts only the Elasticsearch and Elastic Stack pods, and a generic worker, which hosts the remaining Component Pack pods. |
| Load balancer (HA only) | Load balancers can be configured in many ways, using HAProxy, NGINX, or other software. Your cluster requirements might call for a different configuration. |
| Storage (if using external storage) | A storage node contains persistent storage for applications deployed by Component Pack. Data persists even after a pod or machine restarts. In an HA environment, a dedicated storage node is recommended. For other deployment types, storage can reside on the Kubernetes master. |
| Reverse proxy (for Customizer) | Optimize your configuration so that the reverse proxy server redirects to Customizer only the traffic from the pages you want to customize. |
| Device mapper | It is recommended that all deployment types, including proof-of-concept deployments, use the Device Mapper storage driver for Docker storage. For more information, see the Docker article "Use the Device Mapper storage driver". |
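The failover behavior described for master nodes can be pictured with a small Python model of round-robin selection that skips failed masters. This is a simplified sketch under stated assumptions, not HAProxy or NGINX code: the master host names are hypothetical, and `mark_down` and `mark_up` stand in for the health checks that a real load balancer performs on its own.

```python
class RoundRobinBalancer:
    """Hand out master nodes in round-robin order, skipping unhealthy ones."""

    def __init__(self, masters: list[str]) -> None:
        if not masters:
            raise ValueError("at least one master node is required")
        self._masters = list(masters)
        self._healthy = set(masters)
        self._next = 0  # index of the master to try first on the next request

    def mark_down(self, master: str) -> None:
        """Record that a master failed (a real balancer learns this from health checks)."""
        self._healthy.discard(master)

    def mark_up(self, master: str) -> None:
        """Record that a known master is healthy again."""
        if master in self._masters:
            self._healthy.add(master)

    def pick(self) -> str:
        """Return the next healthy master and advance the rotation past it."""
        count = len(self._masters)
        for offset in range(count):
            index = (self._next + offset) % count
            candidate = self._masters[index]
            if candidate in self._healthy:
                self._next = (index + 1) % count
                return candidate
        raise RuntimeError("no healthy master node is available")


if __name__ == "__main__":
    lb = RoundRobinBalancer(["master-1", "master-2", "master-3"])
    print([lb.pick() for _ in range(3)])  # ['master-1', 'master-2', 'master-3']
    lb.mark_down("master-1")  # simulate failure of the leading master
    print([lb.pick() for _ in range(3)])  # ['master-2', 'master-3', 'master-2']
```

Requests keep flowing as long as at least one master is healthy, which is why an HA deployment needs more than one master node behind the load balancer.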

Component Pack also uses the following technologies:

- Apache ZooKeeper enables highly reliable, distributed coordination of group services used by an application.

- MongoDB is an open-source database that uses a document-oriented model rather than a relational data model.

- Redis is an open-source (BSD-licensed), in-memory data structure store, used as a database, cache, and message broker. Redis Sentinel is used for high availability.

- Solr provides distributed indexing, replication and load-balanced querying, automated failover and recovery, and centralized configuration.

- Elasticsearch is a search engine based on Lucene. It provides a distributed, multitenant-capable, full-text search engine with an HTTP web interface and schema-free JSON documents.

- Elastic Stack is a collection of open-source tools that collect log data and help you visualize those logs in a central location. Specific tools in the Elastic Stack include Kibana, Logstash, Filebeat, and Elasticsearch Curator.

- HAProxy is an open-source load-balancing and proxying solution for TCP-based and HTTP-based applications.

- NGINX Ingress Controller is built around the Kubernetes Ingress resource, using a ConfigMap to store the NGINX configuration. Ingress exposes HTTP and HTTPS routes from outside the cluster to services within the cluster.
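As a rough illustration of how an Ingress path rule selects a backend service, the following Python sketch models the Kubernetes Ingress `Prefix` path type: a rule matches when its `/`-separated path elements are a prefix of the request path's elements, and the longest matching rule takes precedence. The paths and service names in `RULES` are hypothetical, and the sketch ignores host rules, the `Exact` path type, and TLS; it is not the NGINX Ingress Controller's implementation.

```python
from typing import Optional

# Hypothetical routing rules: path prefix -> backend service name.
RULES = {
    "/": "frontend-service",
    "/social": "social-service",
    "/social/api": "social-api-service",
}


def path_elements(path: str) -> list[str]:
    """Split a URL path into its non-empty '/'-separated elements."""
    return [part for part in path.split("/") if part]


def route(request_path: str, rules: dict[str, str]) -> Optional[str]:
    """Return the service of the longest rule whose elements prefix the request path."""
    request = path_elements(request_path)
    best_service: Optional[str] = None
    best_length = -1
    for rule_path, service in rules.items():
        rule = path_elements(rule_path)
        # Element-wise prefix match: "/social" matches "/social/x" but not "/socialx".
        if request[: len(rule)] == rule and len(rule) > best_length:
            best_service, best_length = service, len(rule)
    return best_service


if __name__ == "__main__":
    for path in ["/social/api/v1", "/social", "/socialx", "/files"]:
        print(path, "->", route(path, RULES))
```

Matching on whole path elements rather than raw string prefixes keeps a rule for `/social` from accidentally capturing unrelated paths such as `/socialx`.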