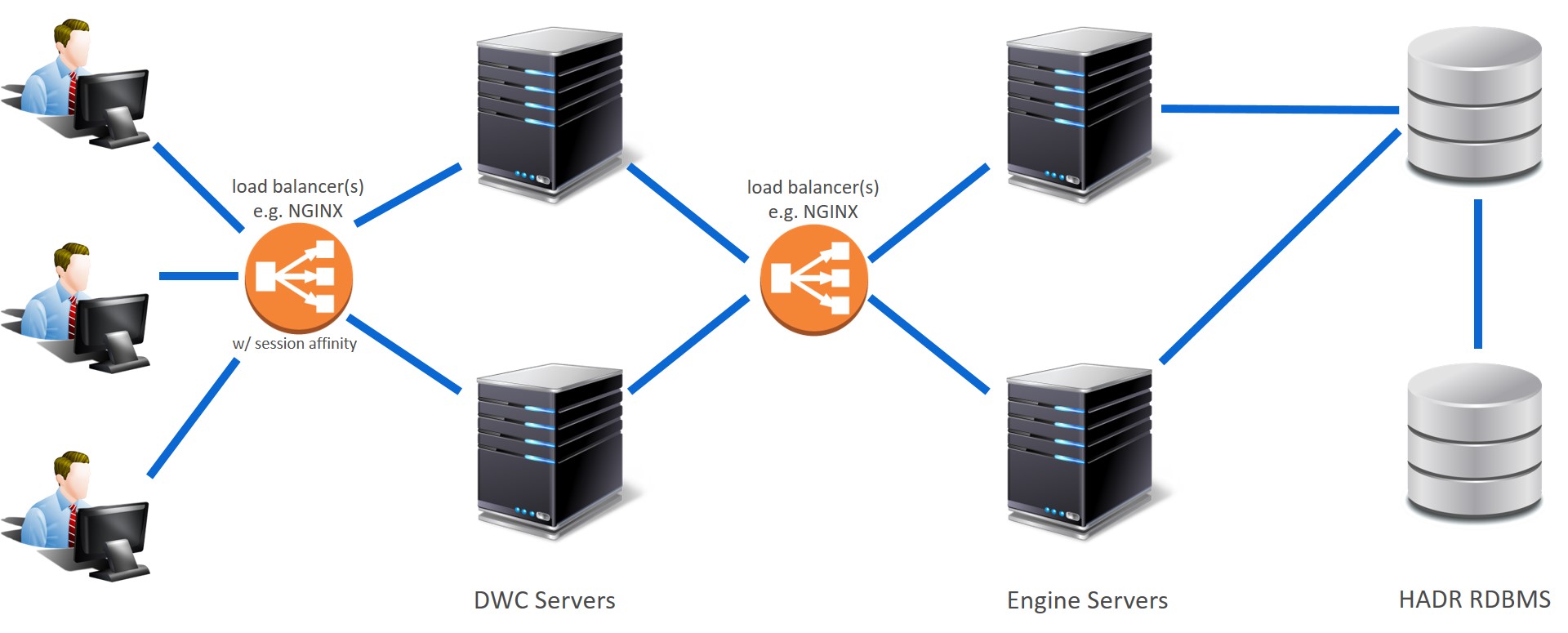

An active-active high availability scenario

Implement active-active high availability between the Dynamic Workload Console and the master domain manager so that a switch to a backup is transparent to Dynamic Workload Console users.

Use a load balancer between the Dynamic Workload Console servers and the master domain manager so that, if the master domain manager must switch to a backup, the switch is transparent to console users.

Configure the master domain manager and backup master domain managers behind a second load balancer so that the workload is balanced across the master domain manager and all backup master domain managers. Load balancing distributes workload requests across all configured nodes so that no single node is overloaded and there is no single point of failure.
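As a rough illustration of how a load balancer spreads requests across nodes, the following Python sketch implements smooth weighted round-robin, the default balancing method that NGINX applies to an upstream group such as `wa_server_backend_https` in the example configuration. The node names are hypothetical, and the sketch ignores health checks and failover.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """A back-end node; the names used with it are hypothetical."""
    name: str
    weight: int = 1
    current: int = 0  # running score maintained by the algorithm


def pick(nodes: list[Node]) -> Node:
    """Return the next node according to smooth weighted round-robin."""
    total = sum(n.weight for n in nodes)
    for n in nodes:
        n.current += n.weight  # every node earns its weight on each pick
    chosen = max(nodes, key=lambda n: n.current)  # first top-scoring node wins ties
    chosen.current -= total  # the winner pays back the total weight
    return chosen
```

With two nodes of weight 1, picks simply alternate between them. With weights 5, 1, and 1 for nodes a, b, and c, seven picks yield a, a, b, a, c, a, a: the heavier node gets more requests, but they are interleaved rather than sent in a burst.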

You might already have installed and configured a number of backup master domain managers, in addition to your master domain manager, that you use for dedicated operations and to reduce the load on the master domain manager. For example, you might have one dedicated to monitoring activities and another to event management, while your scheduling activities run on the master domain manager. Administrators must create an engine connection for each of these, and users must switch between engines to run dedicated operations. If one of them goes down, users must be told which engine to use as a replacement and then switch to it.

To simplify this, configure a load balancer in front of the master domain manager and backup master domain managers. Users are unaware of when a switch occurs, and administrators configure a single engine connection in single sign-on that points to the hostname or IP address and port number of the load balancer, without ever needing to know the hostname of the current active master. The load balancer monitors the engine nodes and balances the workload across them, so the switch to a backup master domain manager is completely transparent to console users. Any backup master domain manager can satisfy HTTP requests, even those that only the active master can satisfy, such as requests on the plan, because those requests are proxied to and from the active master.
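The request flow described above can be modeled in a few lines of Python. This is a hypothetical sketch, not HCL Workload Automation code: the `/plan` path prefix, the node names, and the round-robin counter are assumptions chosen for illustration. The load balancer picks a healthy node, and a request that only the active master can satisfy is proxied to it.

```python
from dataclasses import dataclass

# Hypothetical: path prefixes that only the active master can serve.
MASTER_ONLY_PREFIXES = ("/plan",)


@dataclass
class EngineNode:
    name: str
    healthy: bool = True
    is_active_master: bool = False


def serve(path: str, receiver: EngineNode, nodes: list[EngineNode]) -> str:
    """Return the name of the node that actually answers the request."""
    if path.startswith(MASTER_ONLY_PREFIXES):
        master = next((n for n in nodes if n.is_active_master and n.healthy), None)
        if master is None:
            raise RuntimeError("no active master available")
        return master.name  # proxied to and from the active master
    return receiver.name  # answered locally by the receiving node


def dispatch(path: str, nodes: list[EngineNode], counter: int) -> tuple[str, str]:
    """Load balancer step: choose a healthy node round-robin, then let it serve or proxy."""
    candidates = [n for n in nodes if n.healthy]  # unhealthy nodes are skipped
    if not candidates:
        raise RuntimeError("no healthy engine node")
    receiver = candidates[counter % len(candidates)]
    return receiver.name, serve(path, receiver, nodes)
```

`dispatch` returns the pair (node chosen by the load balancer, node that answered). Promotion of a backup to active master is not modeled here.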

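For example, with DB2 and HADR, the `properties.db2.jcc` element of the data source can specify an alternate database server to which the JDBC driver reroutes client connections if the primary server becomes unavailable:

```xml
<properties.db2.jcc
    databaseName="TWS"
    user="…"
    password="…"
    serverName="MyMaster"
    portNumber="50000"
    clientRerouteAlternateServerName="MyBackup"
    clientRerouteAlternatePortNumber="50000"
    retryIntervalForClientReroute="3000"
    maxRetriesForClientReroute="100"
/>
```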
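The retry behavior controlled by the `clientReroute*` properties can be approximated as follows. This Python sketch is a simplification under stated assumptions, not the DB2 JCC driver's actual algorithm: `connect` is a hypothetical callable, and `retry_interval` is passed to `sleep` unchanged, so check the driver documentation for the unit that `retryIntervalForClientReroute` uses.

```python
import time
from typing import Callable, TypeVar

Conn = TypeVar("Conn")


def connect_with_reroute(
    connect: Callable[[str, int], Conn],
    primary: tuple[str, int],
    alternate: tuple[str, int],
    retry_interval: float,
    max_retries: int,
    sleep: Callable[[float], None] = time.sleep,
) -> Conn:
    """Try the primary server, then the alternate, for up to 1 + max_retries rounds.

    connect(host, port) is hypothetical: it must return a connection or raise
    ConnectionError.
    """
    for attempt in range(max_retries + 1):
        for host, port in (primary, alternate):
            try:
                return connect(host, port)
            except ConnectionError:
                continue  # server unreachable: try the next one
        if attempt < max_retries:
            sleep(retry_interval)  # wait before the next round of attempts
    raise ConnectionError("primary and alternate servers are unreachable")
```

The `sleep` parameter is injectable so that the logic can be exercised without real delays.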

This configuration provides the following benefits:

- High availability: the load balancer monitors the nodes and balances the workload across them, so that no single node is a single point of failure.

- Scalability: as client requests increase, you can add backup master domain manager nodes to the configuration to support the increased workload.

- User-friendly: users are unaware of when a switch to a different node occurs. Administrators do not have to create new engine connections to run workloads on a different node.

- Low overhead: no manual intervention is required when nodes become unavailable. Console users also gain flexibility, because they no longer have to run certain operations on dedicated nodes.

- Optimization of hardware: load balancing distributes session requests across multiple servers, so hardware resources are used evenly.

To implement this scenario, complete the following tasks:

- Install a load balancer on a workstation either within the HCL Workload Automation environment or outside it. Ports must be open between the load balancer and the Dynamic Workload Console nodes, and between the load balancer and the engine nodes.

- Configure multiple Dynamic Workload Console instances in a cluster, where the consoles share the same repository settings and a load balancer dispatches and redirects connections among the nodes in the cluster. The load balancer must support session affinity. See Configuring High Availability.

- Exchange certificates between the load balancer and the Dynamic Workload Console nodes, and between the second load balancer and the master domain manager and backup master domain manager nodes.

- Update the load balancer configuration file with details about the Dynamic Workload Console, master domain manager, and backup master domain manager nodes. The configuration file defines which nodes are available and the routes used to dispatch client calls to those nodes.

- Configure an engine connection that points to the hostname or IP address of the load balancer, and specify the incoming port of the load balancer that corresponds to the outgoing port to the master domain manager (default port number 31116). The load balancer must point to the HTTPS port of the Dynamic Workload Console and the HTTPS port of the master domain manager.

- Configure the RDBMS for high availability and enable the HADR feature.

- To configure the Dynamic Workload Console nodes in a cluster behind the load balancer, modify the ports_config.xml file as follows:

```xml
<httpEndpoint host="${httpEndpoint.host}" httpPort="${host.http.port}" httpsPort="${host.https.port}" id="defaultHttpEndpoint">
    <httpOptions removeServerHeader="true"/>
    <remoteIp useRemoteIpInAccessLog="true"/>
</httpEndpoint>
```
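With `useRemoteIpInAccessLog="true"`, the console can record the original client address carried in forwarding headers instead of the load balancer's address. The Python sketch below illustrates the general technique of recovering a client address from `X-Forwarded-For` behind trusted proxies. It is not Liberty's implementation, and the trusted-proxy set and addresses are assumptions.

```python
from typing import Optional


def client_ip(peer_ip: str, x_forwarded_for: Optional[str], trusted: set[str]) -> str:
    """Resolve the original client address for a request.

    peer_ip is the address of the directly connected peer (for example, the
    load balancer). X-Forwarded-For is honored only when that peer is trusted;
    the list is walked right to left, skipping trusted proxies.
    """
    if peer_ip not in trusted or not x_forwarded_for:
        return peer_ip  # header absent, or sent by an untrusted (possibly spoofing) peer
    hops = [h.strip() for h in x_forwarded_for.split(",") if h.strip()]
    for hop in reversed(hops):
        if hop not in trusted:
            return hop  # first untrusted hop is taken as the client
    return hops[0] if hops else peer_ip  # every hop was a trusted proxy
```

Walking right to left matters: the leftmost entries are supplied by the client and can be forged, whereas the entry appended by the trusted load balancer cannot.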

The following example shows an NGINX configuration (nginx.conf) in which a single load balancer serves both the Dynamic Workload Console nodes, on port 443, and the master domain manager and backup master domain manager nodes, on port 9443:

```nginx
user nginx;
worker_processes 10;          ## Default: 1
worker_rlimit_nofile 8192;
error_log /var/log/nginx/error.log warn;
pid /var/run/nginx.pid;

events {
    worker_connections 4096;  ## Default: 1024
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';
    access_log /var/log/nginx/access.log main;

    sendfile on;
    keepalive_timeout 65;

    ## DWC configuration: ip_hash provides the session affinity
    ## that the console cluster requires.
    upstream wa_console {
        ip_hash;
        server DWC1_HOSTNAME:DWC1_PORT max_fails=3 fail_timeout=300s;
        server DWC2_HOSTNAME:DWC2_PORT max_fails=3 fail_timeout=300s;
        keepalive 32;
    }

    ## Master domain manager and backup master domain manager nodes.
    upstream wa_server_backend_https {
        server MDM1_HOSTNAME:MDM1_PORT weight=1;
        server MDM2_HOSTNAME:MDM2_PORT weight=1;
    }

    ## Entry point for Dynamic Workload Console users.
    server {
        listen 443 ssl;
        ssl_certificate /etc/nginx/certs/nginx.crt;
        ssl_certificate_key /etc/nginx/certs/nginxkey.key;
        ssl_trusted_certificate /etc/nginx/certs/ca-certs.crt;

        location / {
            proxy_pass https://wa_console;
            proxy_cache off;
            ## HTTP/1.1 and an empty Connection header let NGINX reuse
            ## the upstream keepalive connections defined above.
            proxy_http_version 1.1;
            proxy_set_header Connection "";
            proxy_set_header Host $host;
            proxy_set_header Forwarded "for=$remote_addr;proto=$scheme";
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;
            proxy_set_header X-Forwarded-Host $host;
            proxy_set_header X-Forwarded-Port 443;
        }
    }

    ## Entry point for the engine connection defined in the console.
    server {
        listen 9443 ssl;
        ssl_certificate /etc/nginx/certs/nginx.crt;
        ssl_certificate_key /etc/nginx/certs/nginxkey.key;
        ssl_trusted_certificate /etc/nginx/certs/ca-certs.crt;

        location / {
            proxy_pass https://wa_server_backend_https;
            proxy_cache off;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;
            proxy_set_header X-Forwarded-Host $host;
            proxy_set_header Connection "close";
        }
    }
}
```

where:

- DWCx_HOSTNAME:DWCx_PORT is the hostname and HTTPS port of each Dynamic Workload Console node.

- MDMx_HOSTNAME:MDMx_PORT is the hostname and HTTPS port of the master domain manager or of a backup master domain manager.
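The `ip_hash` and `max_fails`/`fail_timeout` settings in the `wa_console` upstream can be illustrated with a simplified Python model. As in NGINX, the affinity key is the first three octets of an IPv4 client address (the whole address otherwise), and a node that fails `max_fails` times within `fail_timeout` seconds is skipped for `fail_timeout` seconds. The hash function, the fallback order, and the node names are assumptions; NGINX's internals differ.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class ConsoleNode:
    address: str                 # hypothetical, for example "DWC1:9443"
    max_fails: int = 3
    fail_timeout: float = 300.0  # seconds, as in fail_timeout=300s
    failures: list[float] = field(default_factory=list)
    down_until: float = 0.0

    def available(self, now: float) -> bool:
        return now >= self.down_until

    def record_failure(self, now: float) -> None:
        # Keep only failures inside the fail_timeout window, then add this one.
        self.failures = [t for t in self.failures if now - t < self.fail_timeout]
        self.failures.append(now)
        if len(self.failures) >= self.max_fails:
            self.down_until = now + self.fail_timeout  # take the node out of rotation
            self.failures.clear()


def affinity_key(client_ip: str) -> str:
    """First three octets of an IPv4 address, the whole address otherwise."""
    octets = client_ip.split(".")
    return ".".join(octets[:3]) if len(octets) == 4 else client_ip


def choose(client_ip: str, nodes: list[ConsoleNode], now: float) -> ConsoleNode:
    """Pick this client's node, falling back to the next available one."""
    digest = hashlib.sha256(affinity_key(client_ip).encode()).digest()
    start = int.from_bytes(digest[:4], "big") % len(nodes)
    for i in range(len(nodes)):
        node = nodes[(start + i) % len(nodes)]
        if node.available(now):
            return node
    raise RuntimeError("no Dynamic Workload Console node available")
```

Because the key ignores the last octet, clients in the same /24 network share a console node, and a client returns to its original node once that node's `fail_timeout` period has elapsed.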