Building indexes by shard using multiple JVMs

When indexing takes too long and further tuning does not help, your server might be approaching its physical limits. In that case, you can distribute the index across two or more Search servers, so that the indexing workload is also distributed. This topic describes how to distribute your index across multiple Java Virtual Machines (JVMs).

Before you begin

To perform index sharding with the Search server, first set up your shard environment using the following guidelines.

- Determine the number of shards that you will be using, based on the available capacity of your server. At least one CPU core's capacity must be available for each separate index shard.

- If you are using index replication, do not configure any of your index shards to participate in your index replication network. These shards are only used for index building. The final version of the index should be on the master server, which should then be replicated to the repeater and then to the subordinates.

- Allocate enough heap memory for each of your index shards. Refer to HCL Commerce Search performance tuning for recommendations on how to configure the solrconfig.xml configuration file.
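As a rough illustration of the first guideline, the following Python sketch estimates how many shards a host can support when one CPU core is set aside per shard. The helper name `max_shards` and the `reserved_cores` parameter (cores held back for the master, database, and other containers) are assumptions made for illustration and are not part of the product.

```python
import os


def max_shards(total_cores: int, reserved_cores: int = 1) -> int:
    """Return an upper bound on the shard count, allowing one core per shard.

    reserved_cores is a hypothetical allowance for other workloads
    (master, database, utility containers) running on the same host.
    """
    if total_cores < 0 or reserved_cores < 0:
        raise ValueError("core counts must be non-negative")
    return max(total_cores - reserved_cores, 0)


if __name__ == "__main__":
    cores = os.cpu_count() or 1  # os.cpu_count() may return None
    print(f"{cores} cores detected, at most {max_shards(cores)} shards")
```

Treat the result as a ceiling rather than a target: heap memory per shard is a separate constraint that this sketch does not model.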

About this task

To distribute an index, you divide it into partitions called shards. Shards can share the same Search Docker container or be run separately in their own Search containers, depending on the system's performance. If your Search shard containers and the Search master container are not located in the same virtual machine or physical machine, you might need extra distributed file system technology, such as remoteStorage, to help the Search shard containers share an index folder with the master container.

Once all of the index shards are successfully populated, they can be merged into one optimized index, to be used with your storefront for sorting, faceting, and filtering.

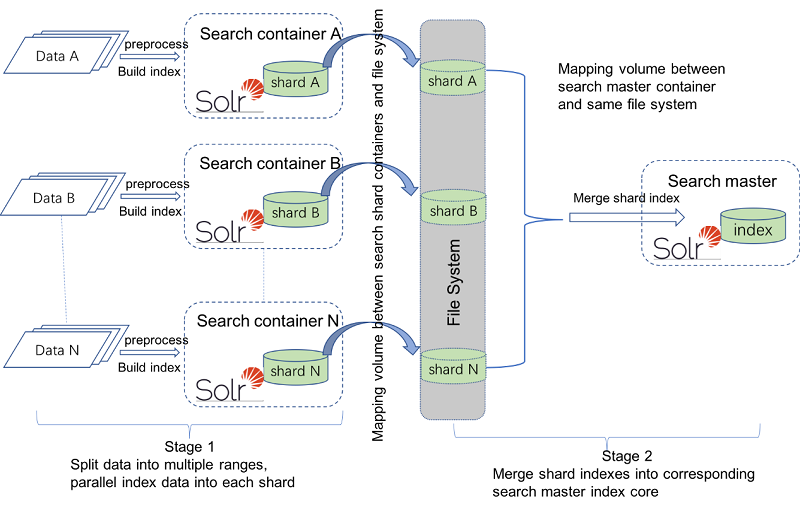

There are two stages to index sharding with multiple JVMs, as there are with a single JVM. In the first stage, the original data is split into multiple ranges. Each range of data is preprocessed and then indexed into its shard’s index cores, with all ranges processed in parallel. In the second stage, all the shards’ index cores are merged into the Search master's corresponding index core. For example, CatalogEntry structured shard indexes are merged into the Search master CatalogEntry structured index core, and unstructured shard indexes are merged into the Search master unstructured index core. For multiple JVMs, the standard approach is to build each shard index in a separate Search server container. If system resources permit, you can configure one Search server to build multiple shard indexes.
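To make the first stage concrete, here is a minimal Python sketch of splitting a numeric key range into contiguous, non-overlapping sub-ranges, one per shard. It assumes the data is partitioned by an integer key and that both bounds of each range are inclusive; how the utility itself interprets its range values is not covered here. The function name `split_range` is hypothetical.

```python
from typing import List, Tuple


def split_range(start: int, end: int, shards: int) -> List[Tuple[int, int]]:
    """Split the inclusive range [start, end] into `shards` contiguous parts.

    Part sizes differ by at most one; earlier parts absorb the remainder.
    """
    if shards < 1:
        raise ValueError("shards must be at least 1")
    total = end - start + 1
    if total < shards:
        raise ValueError("range is smaller than the number of shards")
    base, extra = divmod(total, shards)
    ranges = []
    lo = start
    for i in range(shards):
        size = base + (1 if i < extra else 0)
        ranges.append((lo, lo + size - 1))
        lo += size
    return ranges


if __name__ == "__main__":
    for label, (lo, hi) in zip("ABC", split_range(1, 10, 3)):
        print(f"Shard {label}: {lo}..{hi}")
```

An even split by key is only a starting point. If the keys are unevenly populated, the shards will hold different amounts of data and finish at different times.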

Some extra steps are needed to map volumes between containers and an outside file system. The Search master container needs this mapping so that it can access all shard index folders.
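This mapping requirement can be checked mechanically. The sketch below assumes that the Compose file has already been parsed into a Python dictionary of services (for example with a YAML library, not shown) and that shard services are named with a `shard_` prefix, as in this topic's examples. The function `check_shard_services` is hypothetical. It reports shards that are missing from the master's `volumes_from` list and host ports that are used more than once.

```python
from typing import Dict, List


def check_shard_services(services: Dict[str, dict], master: str = "master") -> List[str]:
    """Return a list of problems found in a parsed Compose `services` mapping."""
    problems = []
    shards = sorted(name for name in services if name.startswith("shard_"))

    # volumes_from entries look like "shard_a:ro"; keep only the service name.
    mounted = {
        entry.split(":")[0]
        for entry in services.get(master, {}).get("volumes_from", [])
    }
    for name in shards:
        if name not in mounted:
            problems.append(f"{master} does not mount volumes from {name}")

    # Each "host:container" port mapping must use a distinct host port.
    seen = {}
    for name in shards + [master]:
        for mapping in services.get(name, {}).get("ports", []):
            host_port = str(mapping).split(":")[0]
            if host_port in seen:
                problems.append(
                    f"host port {host_port} used by {seen[host_port]} and {name}"
                )
            else:
                seen[host_port] = name
    return problems
```

An empty list means that every shard is visible to the master and no two containers compete for the same host port.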

Once you have set up your shard environment, you can index each shard by using the di-parallel-process utility. The diagram earlier in this topic shows the two stages for sharding in multiple Search Docker containers.

Procedure

- Copy docker-compose.yml to your development environment. Rename it docker-compose-shardingMultiJVMs.yml.

- Run the following command to set up the environment:

      docker-compose -f docker-compose-shardingMultiJVMs.yml up -d

  If you have not changed the default settings in the file, this command creates three search shard servers, one search master server, one transaction server, one DB2 container and one utility container. Three search shard servers are mapped to different ports for each container. The ports are http port 3737 and https port 3738.

  For the search shard servers, model your configuration on the following example:

      shard_a:
        image: search-app:latest
        hostname: search_shard_a
        environment:
          - WORKAREA=/shard_a
          - LICENSE=accept
          - TZ=Asia/Shanghai
        ports:
          - 3747:3737
          - 3748:3738
        volumes:
          - /shard_a
        depends_on:
          db:
            condition: service_healthy
        healthcheck:
          test: ["CMD", "curl", "-f", "-H", "Authorization: Basic ","http://localhost:3737/search/admin/resources/health/status?type=container"]
          interval: 20s
          timeout: 180s
          retries: 5

  Note: Set different hostname, external ports and external folders for different search shard containers.

  For the search master container:

      master:
        image: search-app:latest
        hostname: search_master
        environment:
          - SOLR_MASTER=true
          - WORKAREA=/search
          - LICENSE=accept
          - TZ=Asia/Shanghai
        ports:
          - 3737:3737
          - 3738:3738
        volumes_from:
          - shard_a:ro
          - shard_b:ro
          - shard_c:ro
        networks:
          default:
            aliases:
              - search
        depends_on:
          db:
            condition: service_healthy
        healthcheck:
          test: ["CMD", "curl", "-f", "-H", "Authorization: Basic ","http://localhost:3737/search/admin/resources/health/status?type=container"]
          interval: 20s
          timeout: 180s
          retries: 5

  Note: External volume mapping for search master node is configured for shard index folder, to allow master node access to all shard indexes.

- In the Utility server Docker container, modify /opt/WebSphere/CommerceServer90/properties/parallelprocess/di-parallel-process.properties to match your environment. You can use the following examples as a guide.

  a) Configure the hostname and port for the different shard servers as follows. The shard server name must be the same as the hostname or alias in the Docker Compose file.

      Shard.A.common.index-server-name=shard_a
      Shard.A.common.index-server-port=3738
      …
      Shard.B.common.index-server-name=shard_b
      Shard.B.common.index-server-port=3738

  b) Configure the shard index core directories as follows.

      Shard.A.en_US.unstructured-index-core-dir=/shard_a/index/solr/MC_10001/en_US/Unstructured_A/
      Shard.A.en_US.structured-index-core-dir=/shard_a/index/solr/MC_10001/en_US/CatalogEntry_A/
      …
      Shard.B.en_US.unstructured-index-core-dir=/shard_b/index/solr/MC_10001/en_US/Unstructured_B/
      Shard.B.en_US.structured-index-core-dir=/shard_b/index/solr/MC_10001/en_US/CatalogEntry_B/

  Note: Each directory must be an absolute path inside its shard container.

  You can automate the shard configuration process. This is useful if, for example, you expect to create a large number of shards. The auto-sharding process automatically configures properties such as preprocessing-start-range-value, preprocessing-end-range-value, index-core-name and index-core-dir.

  For information on how to set up auto-sharding, see Sharding input properties file.

- Change to the /opt/WebSphere/CommerceServer90/bin directory.

- Run the following command to do sharding in multiple JVMs.

      ./di-parallel-process.sh /opt/WebSphere/CommerceServer90/properties/parallelprocess/di-parallel-process.properties

  For more information, see Running utilities from the Utility server Docker container.
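When there are many shards, the per-shard entries in di-parallel-process.properties follow a regular pattern and can be generated. The Python sketch below reproduces only the four keys that appear in this topic's examples. The function name `shard_properties`, its parameters, and its defaults (`MC_10001`, `en_US`, port 3738, and `shard_<id>` host names) are modeled on those examples and are assumptions that need adjusting for a real environment. It is not a substitute for the product's auto-sharding feature.

```python
from typing import List


def shard_properties(shard_ids: List[str], port: int = 3738,
                     catalog_dir: str = "MC_10001",
                     locale: str = "en_US") -> List[str]:
    """Build property lines for each shard ID (for example "A", "B")."""
    lines = []
    for sid in shard_ids:
        # Assumed naming: must match the hostname or alias in the Compose file.
        host = f"shard_{sid.lower()}"
        # Absolute path inside the shard container, following the examples.
        core_root = f"/{host}/index/solr/{catalog_dir}/{locale}"
        lines += [
            f"Shard.{sid}.common.index-server-name={host}",
            f"Shard.{sid}.common.index-server-port={port}",
            f"Shard.{sid}.{locale}.unstructured-index-core-dir={core_root}/Unstructured_{sid}/",
            f"Shard.{sid}.{locale}.structured-index-core-dir={core_root}/CatalogEntry_{sid}/",
        ]
    return lines


if __name__ == "__main__":
    print("\n".join(shard_properties(["A", "B", "C"])))
```

The output is meant to be reviewed and pasted into the properties file alongside the settings that this sketch does not cover, such as the preprocessing range values.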